Benjamin Perkin’s parents received a troubling call…

A man claiming to be Perkin’s lawyer told them their son was in jail for killing a US diplomat in a car crash.

He then put Perkin on the phone. Perkin told his parents he loved them and that he needed money for legal fees.

The lawyer gave them instructions to wire $15,000 before a court hearing started later that day.

Perkin’s parents rushed to the nearest bank, then sent the money through a bitcoin ATM.

But that evening, the real Perkin called…

Only for his parents to realize they’d been scammed.

- The scammers used AI to “clone” Perkin’s voice.

They simply took snippets of audio from the internet and fed them through an artificial intelligence (AI) program.

It used to take hours of recordings to stitch together audio that resembled a person’s voice.

But thanks to the leap AI’s made this past year, you can now clone any voice from a short, 30-second clip.

Elon Musk, Joe Biden, the Rock… anyone’s voice is fair game.

Dozens of these AI speech apps exist today. And many are free.

While most folks use them to record funny videos—like Trump and Biden playing video games—they’re also the perfect tool for criminals.

Perkin had posted a video to YouTube talking about his snowmobiling hobby. It’s probably what got his family into trouble.

Scammers can also use Instagram, TikTok, and other social media posts against you.

- Online scammers have a new partner in crime…

As we’ve shown you, the popular AI chatbot ChatGPT is helping many businesses become more efficient. That includes criminal organizations.

In a nutshell, ChatGPT is making cybercrime better, faster, and cheaper.

For example, criminals are using ChatGPT to write sophisticated phishing emails. Many of these scammers operate from countries where English isn’t the first language, and clumsy grammar or spelling can tip off victims that something’s not right.

With ChatGPT, this is no longer an issue. Criminals can pretend to be your bank, credit card company, or insurance provider—in perfect English—and you likely can’t tell the difference.

ChatGPT can also talk with victims in real time and convince them to share sensitive information or send money. And it can do it to thousands of folks simultaneously.

Hackers are using ChatGPT to write computer code designed to spy on you and steal sensitive data. Simply clicking on a link in an email could be enough to give hackers access to your device.

ChatGPT’s owner, OpenAI, claims it programmed the chatbot so criminals can’t use it. I’m sure they tried… but they failed. And criminals have other ChatGPT-like AI tools available to them on the internet.

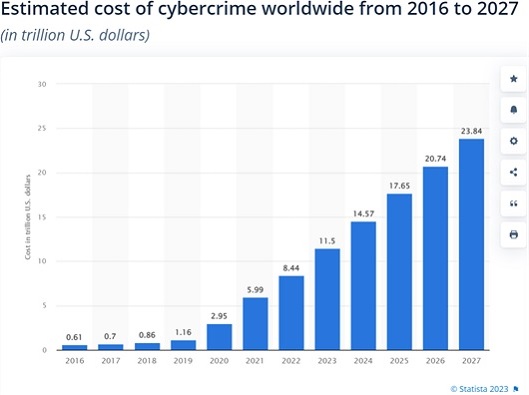

In short, AI models are fueling the rapid advancement of cybercrime, which Statista projects will cost $24 trillion annually by 2027…

Source: Statista

For context, cybercrime cost less than a trillion dollars a year just five years ago.

The problem is, the more digital the world becomes, the more ways cybercriminals will have to attack you.

I remember when each household had just one PC. Today, the average US household has 20 connected devices: iPhones, iPads, laptops, smartwatches, TVs, security cameras, thermostats, even the car in your garage…

And the number of connected devices will only grow. Statista estimates they’ll double worldwide by 2030.

- These are troubling statistics. But they offer one of the surest investment opportunities today…

Cybersecurity.

Top consulting firm McKinsey says cybersecurity spending could grow to $2 trillion, up from around $170 billion today.

That means cyber firms are set to rake in record amounts of money over the next decade.

In fact, I expect cybersecurity stocks to outperform every other software group over the next five years.

An easy way to profit from this megatrend is by investing in an ETF like the First Trust Nasdaq Cybersecurity ETF (CIBR), which is made up of the largest cybersecurity stocks.

Or you can buy my favorite cybersecurity stock, which I recommend in my flagship Disruption Investor advisory. You can “test drive” Disruption Investor risk-free for 30 days at this link.

- What happened to Perkin’s parents can happen to anyone… and it’s going to happen more and more.

If you receive a call from a loved one in distress, keep your cool.

Ask a personal question to verify who you’re really talking to. It can be as simple as asking what you had for lunch the last time you were together.

You might even want to agree on a “password” with your close family members in case you’re ever in this situation. If something seems fishy, ask for the family password.

Also, avoid sending money to unverified bank accounts or via cryptocurrency. A request to pay that way is another telltale sign of a scam.

Stephen McBride

Chief Analyst, RiskHedge

PS: Have you ever gotten scammed? If so, do you have any other tips for RiskHedge readers? Share your story with me at stephen@riskhedge.com.